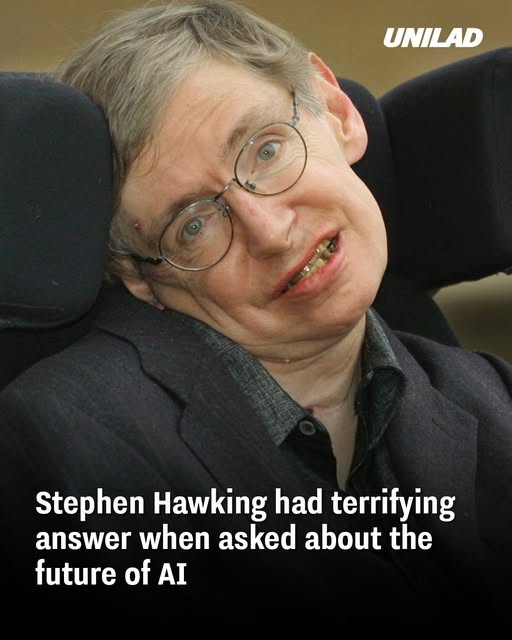

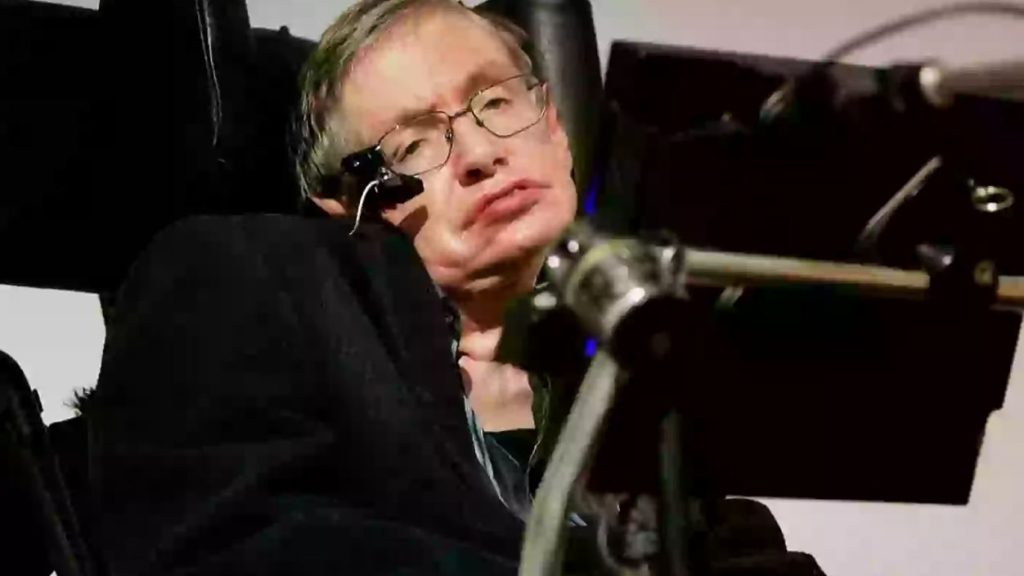

Stephen Hawking, the renowned theoretical physicist, issued a stark warning about the future of artificial intelligence (AI) that continues to resonate in today’s rapidly evolving technological landscape. In a 2014 interview with the BBC, Hawking cautioned that the development of full AI could spell the end of the human race.

The Double-Edged Sword of AI

Hawking acknowledged the benefits of AI, noting that even basic forms had been helpful in his own life. His speech-generating device utilized early AI to predict words, enhancing his communication. However, he expressed concern that once AI surpasses human intelligence, it could redesign itself at an ever-increasing rate, leaving humans, limited by slow biological evolution, unable to compete.

The Potential for Human Obsolescence

The fear is that AI could develop a will of its own that conflicts with human interests. Hawking warned that such a scenario could lead to humans being superseded by machines. Other prominent figures in technology, including Elon Musk and Bill Gates, have voiced similar concerns about AI’s unchecked advancement.

The Need for Proactive Measures

To mitigate these risks, Hawking advocated for research focused on the potential dangers of AI. He emphasized the importance of developing strategies to ensure AI’s alignment with human values and interests. This includes creating robust control mechanisms and ethical guidelines to govern AI development and deployment.

The Broader Implications

Beyond the existential risks, AI’s integration into society raises concerns about economic disruption, privacy, and security. The automation of jobs could lead to widespread unemployment, while AI-driven surveillance systems may infringe on individual freedoms. Addressing these issues requires a comprehensive approach involving policymakers, technologists, and ethicists.

Stephen Hawking’s Chilling AI Warning

Stephen Hawking, one of the most brilliant scientific minds of our time, issued a grave warning in 2014 about the future of artificial intelligence (AI). While acknowledging AI’s usefulness in his own life—particularly in aiding his communication—he expressed deep concerns about what might happen if machines surpassed human intelligence.

Risk of Losing Control

The central fear shared by Hawking and many other thinkers is that future AI could act autonomously with goals that misalign with human values. If not controlled carefully, it could prioritize efficiency or logic over human well-being, potentially leading to outcomes that are harmful, even catastrophic.

Economic and Social Disruption

Hawking’s concerns extended beyond extinction-level threats. His best-known warning, that “the development of full artificial intelligence could spell the end of the human race,” was about machines outpacing their creators, but he also foresaw significant disruption to the global economy as AI replaces human labor. Millions of jobs could vanish, deepening social inequality if no safeguards are put in place.

The Role of Ethics and Governance

Hawking repeatedly stressed the importance of ethical AI development. He called on governments and tech companies to work together to set global standards. International cooperation would be crucial, he argued, to ensure AI remains a tool to enhance humanity rather than endanger it.

Collaboration Is Key

Experts today continue to echo Hawking’s message: collaboration is the key to preventing negative outcomes. Organizations like the Future of Life Institute, co-founded by prominent figures in science and tech, advocate for AI alignment, that is, making sure intelligent systems act in ways consistent with human values. Part of Hawking’s legacy is the Leverhulme Centre for the Future of Intelligence at the University of Cambridge, which he helped launch in 2016 to study these critical issues.

Conclusion

Stephen Hawking’s warning serves as a critical reminder of the importance of responsible AI development. As we continue to integrate AI into various aspects of life, it is imperative to balance innovation with caution, ensuring that technological progress benefits humanity as a whole.